Deep Learning Weekly: Issue #202

Quantum AI, Global AI Index, Embedded Machine Learning, PyTorch Autograd Engine, Templated Deep Learning, and more

Hey folks,

This week in deep learning, we bring you the Global AI Index, PyTorch at Facebook, Embedded Machine Learning, large-scale training and a paper on knowledge distillation.

You may also enjoy Google’s Quantum AI campus, China’s latest language model, a tutorial on the PyTorch autograd engine, a templated deep learning framework, and more!

As always, happy reading and hacking. If you have something you think should be in next week's issue, find us on Twitter: @dl_weekly.

Until next week!

Industry

Unveiling our new Quantum AI campus

Google has unveiled a new Quantum AI campus, where it aims to build a useful quantum computer. Such a machine could have many interesting applications in AI research.

The Global AI Index

The Global AI Index benchmarks nations on their level of investment, innovation, and implementation of artificial intelligence.

AI Can Write Disinformation Now—and Dupe Human Readers

Georgetown researchers used the text generator GPT-3 to write misleading tweets about climate change and foreign affairs, and, alarmingly, people found the posts persuasive.

PyTorch builds the future of AI and machine learning at Facebook

Facebook announced that it is migrating all of its production AI systems to PyTorch and gave a useful overview of the 1,700 PyTorch-based inference models it runs in full production.

Chinese AI lab challenges Google, OpenAI with a model of 1.75 trillion parameters

The Beijing Academy of Artificial Intelligence launched the latest version of Wudao, a deep learning language model with 1.75 trillion parameters, which the lab dubbed the world’s largest ever.

China’s response to COVID showed the world how to make the most of A.I.

This article argues that China’s handling of the pandemic showed the world how deep and specialized its health care data, algorithms, and A.I. research are.

Mobile & Edge

Introduction to Embedded Machine Learning

This Coursera course teaches how to run deep neural networks and other complex ML algorithms on low-power devices such as microcontrollers.

Improving federated learning with dynamic regularization

This article introduces dynamic regularization, a method for training ML models in a federated learning setting, i.e. when the data is decentralized across many nodes, typically IoT devices.
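
The update rules can be sketched on a toy problem. This is a minimal sketch in the style of the FedDyn algorithm, written from our reading of that method rather than from the article's code. Assumptions: every client participates in every round, each client's loss is a 1-D quadratic 0.5 * (theta - c_k)^2 so the local problem has a closed-form minimizer, and the names `feddyn_toy`, `centers`, `alpha` and `lin` are hypothetical. Each client minimizes its own loss, minus a linear correction term, plus an alpha-weighted penalty toward the server model; updating the correction every round makes local drift cancel out, so the server converges to the minimizer of the average loss.

```python
# Minimal FedDyn-style sketch on toy 1-D quadratic client losses.
# Assumption (hypothetical setup): client k's loss is
#   L_k(theta) = 0.5 * (theta - c_k) ** 2,
# so the regularized local objective has a closed-form minimizer.

def feddyn_toy(centers: list[float], alpha: float = 0.5, rounds: int = 200) -> float:
    m = len(centers)
    theta = 0.0          # server model
    lin = [0.0] * m      # per-client linear correction (stored gradient state)
    h = 0.0              # server-side correction state
    for _ in range(rounds):
        local_models = []
        for k, c in enumerate(centers):
            # argmin_t  0.5*(t - c)^2 - lin[k]*t + alpha/2 * (t - theta)^2
            t = (c + lin[k] + alpha * theta) / (1.0 + alpha)
            # Dynamic regularizer update: afterwards lin[k] equals
            # grad L_k at the new local model t (first-order condition).
            lin[k] -= alpha * (t - theta)
            local_models.append(t)
        # Server tracks the average drift of the local models...
        h -= alpha * sum(model - theta for model in local_models) / m
        # ...and corrects the plain average with it.
        theta = sum(local_models) / m - h / alpha
    return theta
```

With full participation, `feddyn_toy([0.0, 2.0, 7.0])` should converge to 3.0, the mean of the client centers, which is the minimizer of the average loss.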

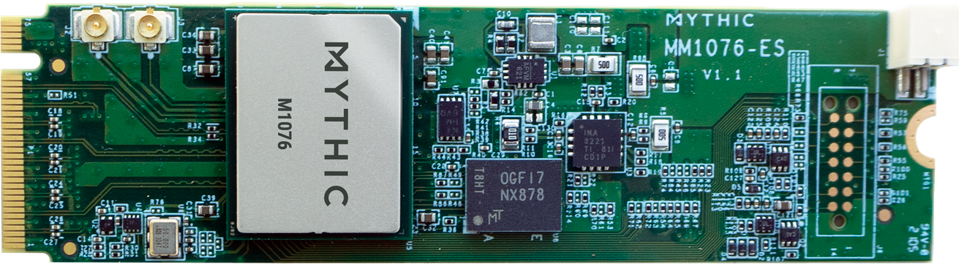

Mythic Launches Industry First Analog AI Chip

Having recently raised $75M in funding, Mythic AI has launched what it calls the industry’s first analog AI chip. The chip bears watching, and the company intends to submit results to industry performance benchmarks.

Learning

Hacker's guide to deep-learning side-channel attacks: code walkthrough

A security researcher at Google explains how to use deep learning to recover encryption keys from CPU power consumption traces.
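
The last step of such an attack, turning per-trace model predictions into a key guess, can be sketched as follows. Assumptions, not taken from the post: a trained model has already produced, for each trace, a probability distribution over the 256 possible values of one intermediate byte, here the first-round SubBytes input `plaintext ^ key`; the function name `rank_key_byte` is hypothetical. Each key guess is scored by summing the log-probabilities the model assigns to the intermediate value that guess implies, so evidence accumulates across traces.

```python
import math

def rank_key_byte(predictions, plaintexts):
    """Return all 256 key-byte guesses, most likely first (hypothetical helper).

    predictions[i][v]: model probability that trace i's intermediate byte is v.
    plaintexts[i]: the known plaintext byte used when trace i was recorded.
    """
    scores = [0.0] * 256
    for probs, pt in zip(predictions, plaintexts):
        for guess in range(256):
            # Intermediate value (SubBytes input) implied by this key guess.
            intermediate = pt ^ guess
            # Accumulate log-probabilities; the epsilon avoids log(0).
            scores[guess] += math.log(probs[intermediate] + 1e-12)
    return sorted(range(256), key=lambda g: scores[g], reverse=True)
```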

Overview of PyTorch Autograd Engine

This post dives deep into how PyTorch’s engine computes gradients with automatic differentiation, with very clear explanations built on simple examples.
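
The core idea behind such an engine, reverse-mode automatic differentiation over a recorded graph, can be sketched in a few lines of plain Python. This toy is not PyTorch's implementation, which works on tensors and does far more; the `Var` class and its methods are hypothetical names chosen for illustration. Each operation records its inputs together with the local derivatives, and `backward()` walks the graph in reverse topological order, accumulating gradients with the chain rule.

```python
import math

class Var:
    """A scalar node in a computation graph (toy reverse-mode autodiff)."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        # parents: tuples of (parent_node, local derivative d(self)/d(parent))
        self.parents = parents

    def __add__(self, other):
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        return Var(self.value * other.value,
                   ((self, other.value), (other, self.value)))

    def sin(self):
        return Var(math.sin(self.value), ((self, math.cos(self.value)),))

    def backward(self):
        # Topologically sort the graph so that every node is processed
        # only after all nodes that depend on it.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0  # d(self)/d(self)
        for node in reversed(order):
            for parent, local in node.parents:
                # Chain rule: add this path's contribution to the parent.
                parent.grad += local * node.grad
```

For example, building `z = x * y + x.sin()` with `x = Var(2.0)` and `y = Var(3.0)` and calling `z.backward()` leaves `x.grad` equal to 3 + cos(2) and `y.grad` equal to 2.0; gradients are accumulated with `+=` so that a variable used on several paths, as `x` is here, receives the sum of all contributions.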

AI can now emulate text style in images in one shot — using just a single word

Facebook introduced TextStyleBrush, an AI research project that can copy the style of text in a photo using just a single word, making it possible, for example, to edit and replace text in images.

Network devices overheat monitoring

In this post, OVHCloud, a cloud provider, details how it uses ML models to monitor network devices and avoid shutdowns caused by overheating.

Libraries & Code

FairScale: a PyTorch extension library for high performance and large scale training

This library extends basic PyTorch capabilities and provides the latest distributed training techniques as composable modules with easy-to-use APIs.

Ivy: the templated deep learning framework

Ivy is a templated deep learning framework that maximizes the portability of deep learning codebases by wrapping the functional APIs of existing frameworks. It currently supports JAX, TensorFlow, PyTorch, MXNet, and NumPy.

Papers & Publications

Towards General Purpose Vision Systems

Abstract:

A special purpose learning system assumes knowledge of admissible tasks at design time. Adapting such a system to unforeseen tasks requires architecture manipulation such as adding an output head for each new task or dataset. In this work, we propose a task-agnostic vision-language system that accepts an image and a natural language task description and outputs bounding boxes, confidences, and text. The system supports a wide range of vision tasks such as classification, localization, question answering, captioning, and more. We evaluate the system's ability to learn multiple skills simultaneously, to perform tasks with novel skill-concept combinations, and to learn new skills efficiently and without forgetting.

A graph placement methodology for fast chip design

Abstract:

Chip floorplanning is the engineering task of designing the physical layout of a computer chip. Despite five decades of research, chip floorplanning has defied automation, requiring months of intense effort by physical design engineers to produce manufacturable layouts. Here we present a deep reinforcement learning approach to chip floorplanning. In under six hours, our method automatically generates chip floorplans that are superior or comparable to those produced by humans in all key metrics, including power consumption, performance and chip area. To achieve this, we pose chip floorplanning as a reinforcement learning problem, and develop an edge-based graph convolutional neural network architecture capable of learning rich and transferable representations of the chip. As a result, our method utilizes past experience to become better and faster at solving new instances of the problem, allowing chip design to be performed by artificial agents with more experience than any human designer. Our method was used to design the next generation of Google’s artificial intelligence (AI) accelerators, and has the potential to save thousands of hours of human effort for each new generation. Finally, we believe that more powerful AI-designed hardware will fuel advances in AI, creating a symbiotic relationship between the two fields.

Does Knowledge Distillation Really Work?

Abstract:

Knowledge distillation is a popular technique for training a small student network to emulate a larger teacher model, such as an ensemble of networks. We show that while knowledge distillation can improve student generalization, it does not typically work as it is commonly understood: there often remains a surprisingly large discrepancy between the predictive distributions of the teacher and the student, even in cases when the student has the capacity to perfectly match the teacher. We identify difficulties in optimization as a key reason for why the student is unable to match the teacher. We also show how the details of the dataset used for distillation play a role in how closely the student matches the teacher -- and that more closely matching the teacher paradoxically does not always lead to better student generalization.
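
For readers new to the technique, here is a minimal sketch of the classic distillation objective (a temperature-softened KL divergence from teacher to student, in the style popularized by Hinton et al.), plus top-1 agreement between teacher and student, one simple way to measure how closely the student matches the teacher. The function names are hypothetical, the default temperature is an arbitrary illustration, and the paper's exact training setup and fidelity metrics may differ.

```python
import math

def softmax(logits, temperature=1.0):
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=4.0):
    """KL(teacher || student) on temperature-softened distributions,
    scaled by T^2 so gradient magnitudes stay comparable across temperatures."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl

def argmax(values):
    return max(range(len(values)), key=values.__getitem__)

def top1_agreement(student_batch, teacher_batch):
    """Fraction of examples where student and teacher predict the same class."""
    matches = sum(argmax(s) == argmax(t) for s, t in zip(student_batch, teacher_batch))
    return matches / len(teacher_batch)
```

In typical training setups this soft-target term is combined with ordinary cross-entropy on the true labels; the paper's point is that even when such a loss is minimized, the student's predictive distribution can remain far from the teacher's.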