Deep Learning Weekly: Issue #204

NVIDIA’s new deep learning model for video conferencing, a paper on Vision Outlooker for Visual Recognition, and more

Hey folks,

This week in deep learning, we bring you NVIDIA's new deep learning model for video conferencing, a TinyML tutorial on a COVID health condition classifier, a bird call classifier on a Nano 33 BLE Sense, and a paper on the Vision Outlooker for Visual Recognition.

You may also enjoy a new AI-based mapping service that offers routing advice to drivers and delivery fleets, a pose detection tutorial on Android using Google's On-Device ML Kit, a comprehensive blog on Multi-Task Learning, Facebook AI Research's library for state-of-the-art detection and segmentation algorithms, and more!

As always, happy reading and hacking. If you have something you think should be in next week’s issue, find us on Twitter: @dl_weekly.

Until next week!

Industry

How AI Research Is Reshaping Video Conferencing

Vid2Vid Cameo, one of the deep learning models behind the NVIDIA Maxine software development kit for video conferencing, uses generative adversarial networks to synthesize realistic talking-head videos using a single 2D image of a person.

DeepMind AGI paper adds urgency to ethical AI

A DeepMind paper that proposes how AGI can be achieved in a shorter time frame given the advancements in reinforcement learning and language models.

Using machine learning to build maps that give smarter driving advice

A team at QCRI partnered with a Doha-based taxi company called Karwa to build a new mapping service called QARTA that offers routing advice to drivers and delivery fleets.

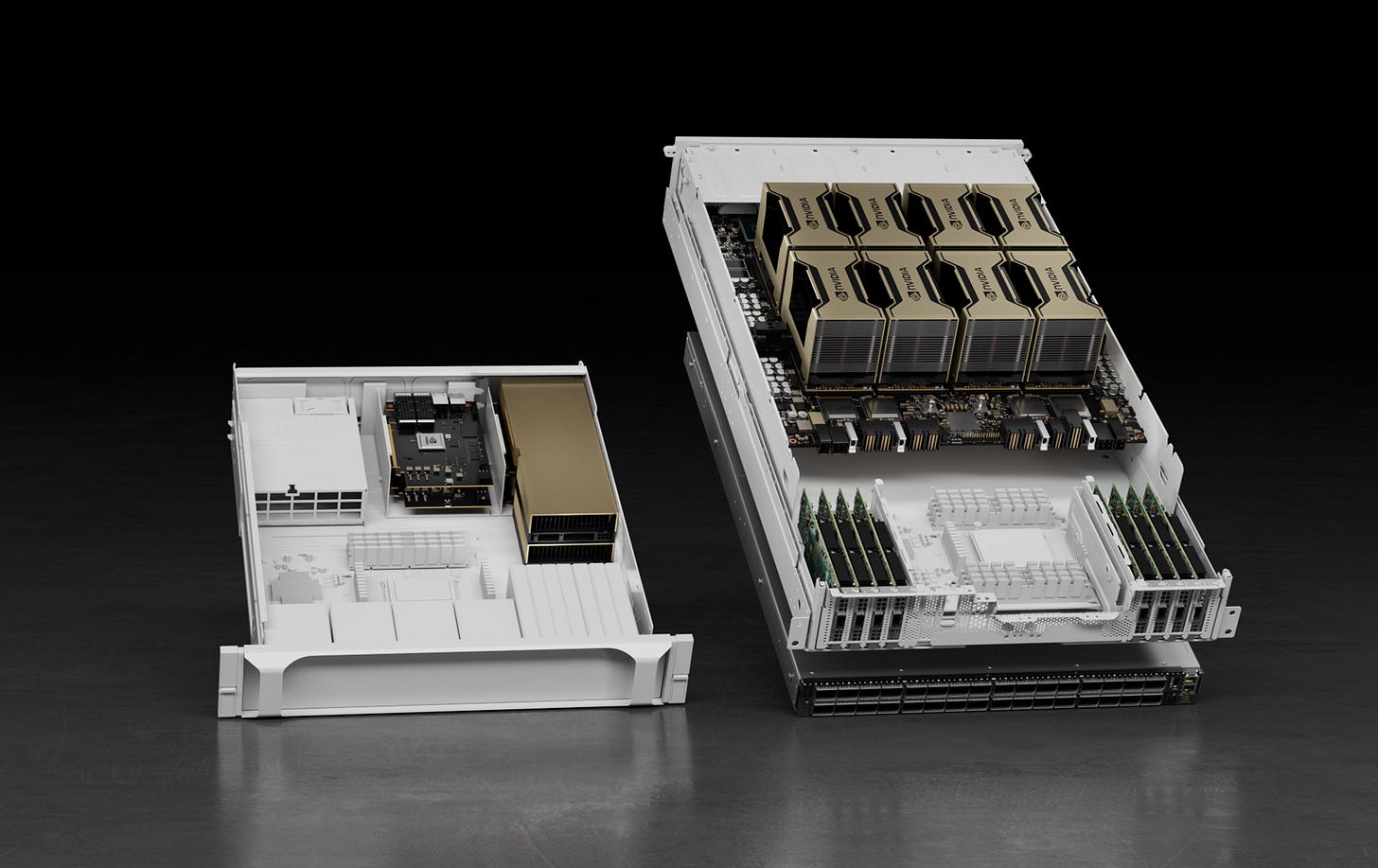

Nvidia is turbocharging its Nvidia HGX artificial intelligence supercomputing platform with some major enhancements to its compute, networking and storage performance.

Google's algorithm misidentified an engineer as a serial killer

Google’s knowledge graph falsely registers a software engineer as a serial killer in a Google search.

How PepsiCo uses AI to create products consumers don’t know they want

Leaders from Pepsi discuss numerous ways in which machine learning is utilized in their enterprise operations.

Mobile & Edge

Qualcomm’s Snapdragon 888 Plus ups the CPU and AI performance

Qualcomm announces the latest iteration of the Snapdragon 888 mobile processor, which can run 32 trillion operations per second for AI tasks.

Covid Patient Health Assessing Device using Edge Impulse

A brief tutorial on a TinyML medical device that uses Edge Impulse to classify and analyze a Covid patient's health condition.

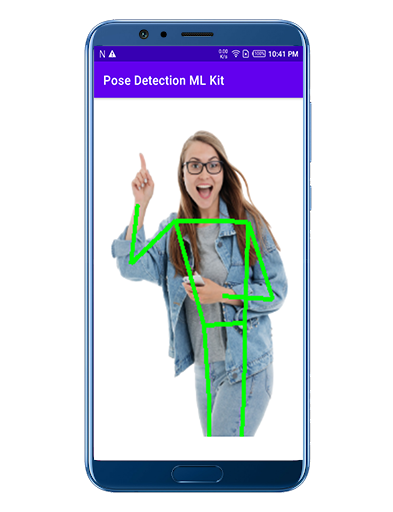

Pose Detection On Android using Google’s On-Device ML Kit

An article testing out pose detection on Android with the help of Google ML Kit’s Pose Detection API.

Use tinyML on the Nano 33 BLE Sense to classify different bird calls

A simple walkthrough showcasing a four-bird classifier using a Nano 33 BLE Sense.

Learning

How to Do Multi-Task Learning Intelligently

A comprehensive blog that discusses the motivation for Multi-Task Learning (MTL) as well as some use cases, difficulties, and recent algorithmic advances.

Improving Breast Cancer Detection in Ultrasound Imaging Using AI

An introduction to a paper on designing a weakly supervised deep neural network that works in a way resembling the diagnostic procedure of radiologists.

A Dataset for Studying Gender Bias in Translation

Google releases the Translated Wikipedia Biographies dataset, which can be used to evaluate the gender bias of translation models.

Improving Genomic Discovery with Machine Learning

An article highlighting machine learning models that can be used to quickly phenotype large cohorts and increase statistical power for genome-wide association studies.

Fine-Tuning Transformer Model for Invoice Recognition

A step-by-step guide to fine-tuning Microsoft’s recently released LayoutLM model on an annotated custom dataset that includes French and English invoices.

Libraries & Code

Detectron2: a library that provides SOTA computer vision algorithms

Facebook AI Research's next generation library that provides state-of-the-art detection and segmentation algorithms.

Unity-Technologies/ml-agents: Unity Machine Learning Agents Toolkit

An open-source project that enables games and simulations to serve as environments for training intelligent agents.

google-research/deeplab2: DeepLab2 is a TensorFlow library for deep labeling

A TensorFlow library for deep labeling, aiming to provide a unified and state-of-the-art TensorFlow codebase for dense pixel labeling tasks including different segmentations.

Papers & Publications

VOLO: Vision Outlooker for Visual Recognition

Abstract:

Visual recognition has been dominated by convolutional neural networks (CNNs) for years. Though recently the prevailing vision transformers (ViTs) have shown great potential of self-attention based models in ImageNet classification, their performance is still inferior to latest SOTA CNNs if no extra data are provided. In this work, we aim to close the performance gap and demonstrate that attention-based models are indeed able to outperform CNNs. We found that the main factor limiting the performance of ViTs for ImageNet classification is their low efficacy in encoding fine-level features into the token representations. To resolve this, we introduce a novel outlook attention and present a simple and general architecture, termed Vision Outlooker (VOLO). Unlike self-attention that focuses on global dependency modeling at a coarse level, the outlook attention aims to efficiently encode finer-level features and contexts into tokens, which are shown to be critical for recognition performance but largely ignored by the self-attention. Experiments show that our VOLO achieves 87.1% top-1 accuracy on ImageNet-1K classification, being the first model exceeding 87% accuracy on this competitive benchmark, without using any extra training data. In addition, the pre-trained VOLO transfers well to downstream tasks, such as semantic segmentation. We achieve 84.3% mIoU score on the Cityscapes validation set and 54.3% on the ADE20K validation set.
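For readers less familiar with the two metrics quoted in the abstract, here is a minimal sketch of how top-1 accuracy and mean intersection-over-union (mIoU) are commonly defined. This is not the VOLO authors' evaluation code: the function names `top1_accuracy` and `mean_iou` and the toy inputs are hypothetical, and real benchmark tooling typically accumulates a confusion matrix over the whole dataset rather than looping over flat label lists.

```python
def top1_accuracy(predicted, actual):
    """Fraction of samples whose top-scoring predicted class equals the true class."""
    if len(predicted) != len(actual) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


def mean_iou(predicted, actual, num_classes):
    """Average per-class IoU over flat per-pixel label lists.

    Classes that appear in neither list are skipped (an assumption of this
    sketch; conventions differ between benchmarks).
    """
    if len(predicted) != len(actual):
        raise ValueError("inputs must be of equal length")
    ious = []
    for c in range(num_classes):
        # Pixels labeled c in both prediction and ground truth.
        intersection = sum(1 for p, a in zip(predicted, actual) if p == c and a == c)
        # Pixels labeled c in either prediction or ground truth.
        union = sum(1 for p, a in zip(predicted, actual) if p == c or a == c)
        if union:
            ious.append(intersection / union)
    if not ious:
        raise ValueError("no class is present in the inputs")
    return sum(ious) / len(ious)


if __name__ == "__main__":
    # Toy classification labels: 3 of 4 predictions match.
    print(top1_accuracy([0, 1, 2, 2], [0, 1, 1, 2]))
    # Toy 4-pixel segmentation with 2 classes.
    print(mean_iou([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2))
```

In the toy segmentation, class 0 overlaps on 1 of 2 pixels and class 1 on 2 of 3, so the mean is the average of those two ratios.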

Video Swin Transformer

Abstract:

The vision community is witnessing a modeling shift from CNNs to Transformers, where pure Transformer architectures have attained top accuracy on the major video recognition benchmarks. These video models are all built on Transformer layers that globally connect patches across the spatial and temporal dimensions. In this paper, we instead advocate an inductive bias of locality in video Transformers, which leads to a better speed-accuracy trade-off compared to previous approaches which compute self-attention globally even with spatial-temporal factorization. The locality of the proposed video architecture is realized by adapting the Swin Transformer designed for the image domain, while continuing to leverage the power of pre-trained image models. Our approach achieves state-of-the-art accuracy on a broad range of video recognition benchmarks, including on action recognition (84.9 top-1 accuracy on Kinetics-400 and 86.1 top-1 accuracy on Kinetics-600 with ~20x less pre-training data and ~3x smaller model size) and temporal modeling (69.6 top-1 accuracy on Something-Something v2).