Deep Learning Weekly: Issue #205

The AI pair programmer, a next-generation simulation platform for AI agents, natural language prompting and more

Hey folks,

This week in deep learning, we bring you an AI pair programmer, WHO's first report on AI in healthcare, a comprehensive article on natural language prompting, and Facebook's work on democratizing conversational AI systems through new datasets and research.

You may also enjoy machine learning models that learned to simulate atomic clusters, a next-generation simulation platform for AI agents, class activation maps for your PyTorch models, a paper on an extension of CLIP that handles audio, and more!

As always, happy reading and hacking. If you have something you think should be in next week’s issue, find us on Twitter: @dl_weekly.

Until next week!

Industry

Introducing GitHub Copilot: your AI pair programmer

GitHub has launched the technical preview of GitHub Copilot, a new AI pair programmer powered by OpenAI Codex that helps you write better code.

Physicists Teach AI to Simulate Atomic Clusters

Researchers from the University of Colorado Boulder trained a neural network and a random forest to predict energy bands from 16-atom arrangements, using carefully simulated training data.

WHO report on AI in healthcare is a mixed bag of horror and delight

The World Health Organization issued its first report on artificial intelligence in healthcare, highlighting inclusivity, explainability, and other relevant matters.

Habitat 2.0: Training home assistant robots with faster simulation and new benchmarks

Facebook announces a next-generation simulation platform that lets AI researchers teach machines not only to navigate through photo-realistic 3D virtual environments but also to interact with objects just as they would in a real home.

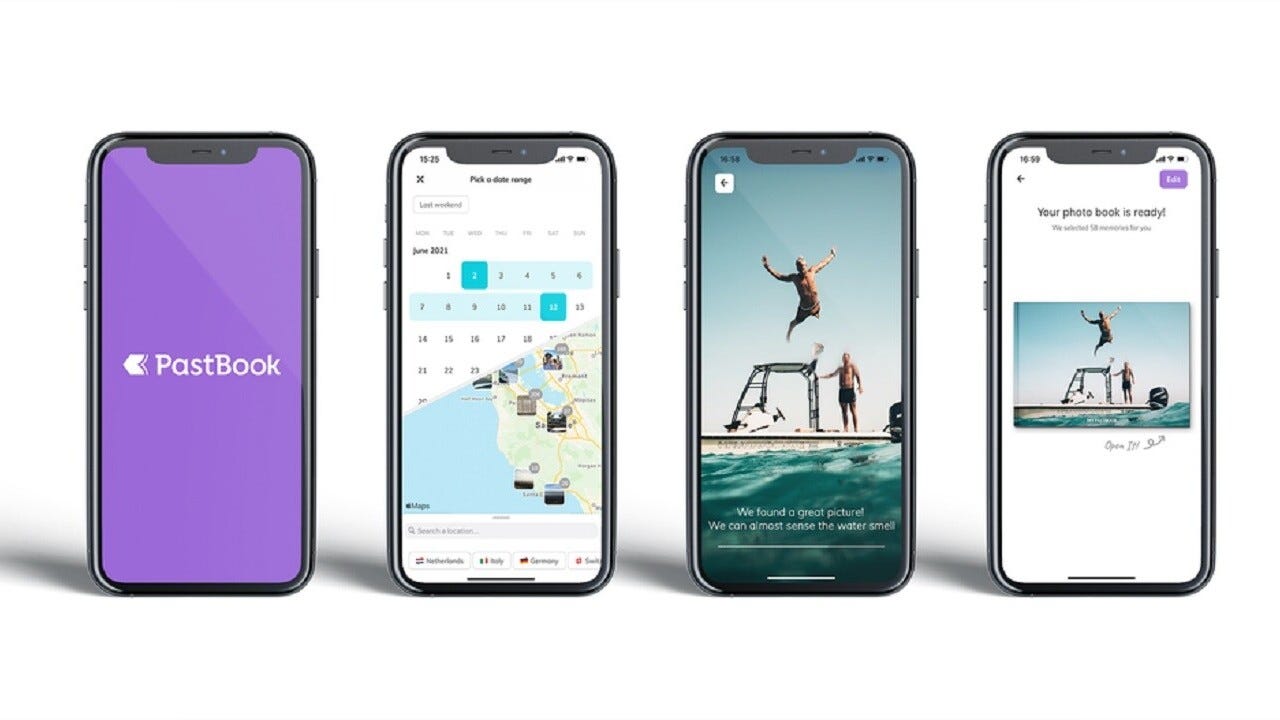

PastBook launches a Google Photos-esque AI-powered photobook app

PastBook, a startup out of Amsterdam, is bringing its web-to-print, AI-powered photobook service to iOS.

TikTok parent ByteDance has begun selling the video app’s AI to other clients

ByteDance, the Beijing-based parent company of video-sharing app TikTok, has started selling TikTok’s AI to other companies.

Attackers use ‘offensive AI’ to create deepfakes for phishing campaigns

Researchers explore the constantly evolving organizational threats, such as deepfake impersonations and adversarial attacks, that artificial intelligence has brought to the table.

Mobile & Edge

Deep Dive: Sony Spresense with Edge Impulse (Part 1)

A technical blog post detailing Sony Spresense’s features, which were designed with TinyML and IoT applications in mind.

Image Labeling In Android using Google’s On-Device ML Kit

A short article on how to implement ML Kit’s on-device Image Labeling API on Android.

Edge Impulse workshop on the 27th of July

A 90-minute hands-on workshop on smart sensor analysis, image processing, and end-to-end TinyML models.

Learning

Prompting: Better Ways of Using Language Models for NLP Tasks

A comprehensive article discussing recent progress in natural-language prompts, soft prompts, and in-context learning.
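To make in-context learning concrete: a few labeled demonstrations are placed in the prompt ahead of the query, and the language model is expected to continue the pattern. The sketch below only assembles such a prompt as a string. The review/sentiment template, the demo sentences, and the `build_few_shot_prompt` helper are all hypothetical, and no particular model or API is assumed.

```python
def build_few_shot_prompt(demos, query, template="Review: {text}\nSentiment: {label}"):
    """Join labeled demonstrations and one unlabeled query into a single prompt.

    demos: list of (text, label) pairs; template is a hypothetical format.
    """
    blocks = [template.format(text=text, label=label) for text, label in demos]
    # The query reuses the template with an empty label slot, so whatever the
    # model generates next is read as its prediction for the query.
    blocks.append(template.format(text=query, label="").rstrip())
    return "\n\n".join(blocks)


demos = [
    ("A gripping, beautifully shot film.", "positive"),
    ("Two hours I will never get back.", "negative"),
]
prompt = build_few_shot_prompt(demos, "The plot was thin but the acting was superb.")
print(prompt)
```

The prompt ends with `Sentiment:`, leaving the label for the model to fill in; the article also covers soft prompts, which replace such hand-written text with learned embeddings.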

Democratizing conversational AI systems through new data sets and research

Facebook presents several novel techniques that effectively scale NLU models to support more diverse use cases in more languages.

Sentence Transformers in the Hugging Face Hub

Hugging Face showcases the new features of Sentence Transformers, a framework for sentence, paragraph and image embeddings.

Quickly Training Game-Playing Agents with Machine Learning

An article discussing Google’s ML-based system that game developers can use to quickly and efficiently train game-testing agents.

Libraries & Code

frgfm/torch-cam: Class activation maps for your PyTorch models

A simple way to leverage the class-specific activation of convolutional layers in PyTorch.
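For readers new to CAMs: the original class activation map applies to networks that end in global average pooling followed by a linear classifier, and the map for a class is the sum of the last convolutional layer's feature maps, each weighted by that class's classifier weight. The plain-Python sketch below illustrates that computation on made-up 2x2 feature maps; `class_activation_map` is a hypothetical helper, not part of torch-cam's API.

```python
def class_activation_map(feature_maps, class_weights):
    """Weighted sum of K feature maps (each H x W nested lists), min-max scaled to [0, 1]."""
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    # CAM_c(i, j) = sum_k w_k^c * A_k(i, j)
    for weight, fmap in zip(class_weights, feature_maps):
        for i in range(height):
            for j in range(width):
                cam[i][j] += weight * fmap[i][j]
    # Min-max normalization, a common step before overlaying the map on an image.
    lo = min(min(row) for row in cam)
    hi = max(max(row) for row in cam)
    if hi == lo:
        return [[0.0] * width for _ in range(height)]
    return [[(v - lo) / (hi - lo) for v in row] for row in cam]


# Two made-up 2x2 feature maps and one class's (made-up) classifier weights.
feature_maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
class_weights = [0.5, 1.0]
print(class_activation_map(feature_maps, class_weights))  # [[0.25, 0.0], [0.0, 1.0]]
```

In practice the low-resolution map is upsampled to the input image size before it is overlaid as a heatmap.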

blue-yonder/tsfresh: Automatic extraction of relevant features from time series

A library that automates feature extraction via scalable hypothesis tests and comes with a robust feature selection algorithm.
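The hypothesis-test step can be pictured as follows: each extracted feature gets a p-value for its relevance to the target, and only features that survive a false-discovery-rate correction are kept. As far as we know, tsfresh's FRESH selection relies on the Benjamini-Yekutieli procedure; the sketch below implements that procedure in plain Python on hypothetical p-values. It is not tsfresh's code or API, and the feature names are invented.

```python
def benjamini_yekutieli(p_values, fdr=0.05):
    """Return the names of features whose null hypothesis ("irrelevant") is rejected.

    p_values: dict mapping a feature name to its p-value.
    Controls the false discovery rate at `fdr` under arbitrary dependence.
    """
    names = sorted(p_values, key=p_values.get)  # ascending p-values
    m = len(names)
    if m == 0:
        return []
    # Harmonic-sum correction that makes the bound hold for dependent tests.
    c_m = sum(1.0 / i for i in range(1, m + 1))
    n_rejected = 0
    for rank, name in enumerate(names, start=1):
        # Step-up rule: keep the largest rank k with p_(k) <= k * fdr / (m * c_m).
        if p_values[name] <= rank * fdr / (m * c_m):
            n_rejected = rank
    return names[:n_rejected]


# Hypothetical p-values from per-feature relevance tests.
p_values = {
    "mean": 0.001,
    "maximum": 0.2,
    "variance": 0.004,
    "autocorrelation_lag1": 0.8,
    "linear_trend_slope": 0.03,
}
print(benjamini_yekutieli(p_values))  # ['mean', 'variance']
```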

apache/submarine: Submarine is a Cloud Native Machine Learning Platform

A cloud-native platform that allows data scientists to create end-to-end machine learning workflows.

Papers & Publications

AudioCLIP: Extending CLIP to Image, Text and Audio

Abstract:

In the past, the rapidly evolving field of sound classification greatly benefited from the application of methods from other domains. Today, we observe the trend to fuse domain-specific tasks and approaches together, which provides the community with new outstanding models.

In this work, we present an extension of the CLIP model that handles audio in addition to text and images. Our proposed model incorporates the ESResNeXt audio-model into the CLIP framework using the AudioSet dataset. Such a combination enables the proposed model to perform bimodal and unimodal classification and querying, while keeping CLIP's ability to generalize to unseen datasets in a zero-shot inference fashion.

AudioCLIP achieves new state-of-the-art results in the Environmental Sound Classification (ESC) task, outperforming other approaches by reaching accuracies of 90.07% on the UrbanSound8K and 97.15% on the ESC-50 datasets. Further, it sets new baselines in the zero-shot ESC-task on the same datasets (68.78% and 69.40%, respectively).

Finally, we also assess the cross-modal querying performance of the proposed model as well as the influence of full and partial training on the results. For the sake of reproducibility, our code is published.
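The zero-shot inference mentioned in the abstract follows the CLIP recipe: embed the input and a text description of every candidate class into a shared space, then pick the class whose text embedding is most similar. The sketch below shows only that final step, using cosine similarity. The three-dimensional vectors are invented stand-ins for what the audio and text encoders would produce, and `zero_shot_classify` is a hypothetical helper rather than AudioCLIP's published code.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def zero_shot_classify(audio_embedding, label_embeddings):
    """Return the best-matching label and the similarity score for every label."""
    scores = {
        label: cosine_similarity(audio_embedding, text_embedding)
        for label, text_embedding in label_embeddings.items()
    }
    return max(scores, key=scores.get), scores


# Made-up embeddings; a real model would produce these with its encoders.
label_embeddings = {
    "dog bark": [0.9, 0.1, 0.0],
    "siren": [0.0, 1.0, 0.2],
    "rain": [0.1, 0.0, 1.0],
}
audio_embedding = [0.8, 0.2, 0.1]
label, scores = zero_shot_classify(audio_embedding, label_embeddings)
print(label)  # dog bark
```

Because no classifier is trained for the candidate labels, new classes can be added simply by embedding new text descriptions.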

R-Drop: Regularized Dropout for Neural Networks

Abstract:

Dropout is a powerful and widely used technique to regularize the training of deep neural networks. In this paper, we introduce a simple regularization strategy upon dropout in model training, namely R-Drop, which forces the output distributions of different sub models generated by dropout to be consistent with each other. Specifically, for each training sample, R-Drop minimizes the bidirectional KL-divergence between the output distributions of two sub models sampled by dropout. Theoretical analysis reveals that R-Drop reduces the freedom of the model parameters and complements dropout. Experiments on 5 widely used deep learning tasks (18 datasets in total), including neural machine translation, abstractive summarization, language understanding, language modeling, and image classification, show that R-Drop is universally effective. In particular, it yields substantial improvements when applied to fine-tune large-scale pre-trained models, e.g., ViT, RoBERTa-large, and BART, and achieves state-of-the-art (SOTA) performances with the vanilla Transformer model on WMT14 English → German translation (30.91 BLEU) and WMT14 English → French translation (43.95 BLEU), even surpassing models trained with extra large-scale data and expert-designed advanced variants of Transformer models.
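The R-Drop objective in the abstract can be sketched for a single classification example: run the same input through the model twice with different dropout masks, apply the usual negative log-likelihood to both outputs, and add the bidirectional KL divergence between the two predicted distributions, scaled by a weight alpha. Below is a plain-Python sketch under those assumptions; the toy model `dropout_linear`, its weights, and `alpha=1.0` are hypothetical, and this is not the authors' implementation.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q):
    """KL(p || q) for two strictly positive probability vectors."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


def r_drop_loss(logits1, logits2, label, alpha=1.0):
    """NLL on both dropout passes plus alpha times the averaged bidirectional KL."""
    p1, p2 = softmax(logits1), softmax(logits2)
    nll = -math.log(p1[label]) - math.log(p2[label])
    bidirectional_kl = 0.5 * (kl_divergence(p1, p2) + kl_divergence(p2, p1))
    return nll + alpha * bidirectional_kl


def dropout_linear(x, weights, drop_prob, rng):
    """Hypothetical toy model: inverted dropout on the inputs, then a linear layer."""
    kept = [xi / (1.0 - drop_prob) if rng.random() >= drop_prob else 0.0 for xi in x]
    return [sum(w * k for w, k in zip(row, kept)) for row in weights]


rng = random.Random(0)
x = [1.0, 2.0, 0.5, -1.0]
weights = [
    [0.2, -0.1, 0.4, 0.0],
    [0.5, 0.3, -0.2, 0.1],
    [-0.3, 0.2, 0.1, 0.6],
]
# Two forward passes of the same input sample two different dropout sub-models.
logits1 = dropout_linear(x, weights, drop_prob=0.3, rng=rng)
logits2 = dropout_linear(x, weights, drop_prob=0.3, rng=rng)
print(r_drop_loss(logits1, logits2, label=1, alpha=1.0))
```

When both passes happen to agree, the KL term vanishes and the loss reduces to ordinary NLL on the two passes; the penalty grows only as the sub-models' predictions diverge.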