Deep Learning Weekly: Issue #211

The new mobile AI chip for Google’s Pixel, identifying birds by sound, what happened to IBM Watson, a mini version of DALL-E image generation, big funding for AI startups, and more

Hey folks,

This week in deep learning, we bring you Google’s custom-built chip, a review of IBM’s Watson, language modeling applied to understanding proteins, and a large funding round for AI startup Dataiku.

You may also enjoy top AI researchers’ Turing lecture, TensorFlow’s latest release, a JAX tutorial, a discussion on the future of AI computing, and more!

As always, happy reading and hacking. If you have something you think should be in next week's issue, find us on Twitter: @dl_weekly.

Until next week!

Industry

OpenAI warns AI behind GitHub’s Copilot may be susceptible to bias

One month after launching Copilot, a service that provides suggestions for whole lines of code inside development environments, OpenAI warns about its significant limitations, in particular biases coming from the training data.

AI Doesn’t Have to Be Too Complicated or Expensive for Your Business

This article states that to deploy AI in production, companies should focus more on getting good data, and on using MLOps tools needed to support the data collection process.

Accelerating Quadratic Optimization Up to 3x With Reinforcement Learning

A research team proposes RLQP, an accelerated solver that uses deep reinforcement learning to speed up the solving of quadratic programs. This could have broad implications in machine learning.

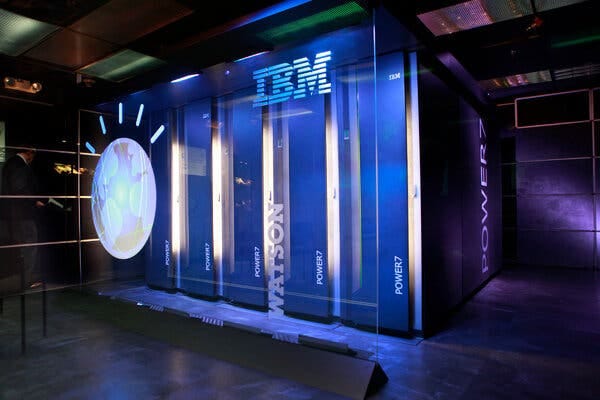

What Ever Happened to IBM’s Watson?

After beating the best “Jeopardy!” player a decade ago, IBM’s AI was supposed to transform industries. Now, IBM has settled on a humbler vision for Watson.

In this Turing lecture, Yoshua Bengio, Yann LeCun, and Geoffrey Hinton describe the origins of deep learning, review a few of the more recent advances, and discuss some of the future challenges.

Dataiku Series E: Unleashing Everyday AI

Dataiku, a French company building a data science and ML platform, announced a $400 million Series E funding round. It will be used to unleash Everyday AI, its main product, within exponentially more organizations around the world.

Mobile & Edge

Google Tensor debuts on the new Pixel 6 this fall

Google will release its Pixel 6 phone this fall, with a lot of new features, from a completely revamped camera system to speech recognition and much more, powered by Tensor, a custom-built chip.

Accelerating Machine Learning Model Inference on Google Cloud Dataflow with NVIDIA GPUs

With Dataflow GPU, built by Google Cloud and NVIDIA, users can now leverage the power of NVIDIA GPUs in their machine learning inference workflows. This post shows how to access these performance benefits with BERT.

Besieging GPU: Who Will Be the Next Overlord of AI Computing After NVIDIA?

This article introduces several AI chip companies with unique technologies that could threaten the dominance of GPUs in AI computing. Will one of those companies replace NVIDIA in the coming years?

BirdNET: The Easiest Way To Identify Birds By Sound

The Cornell Lab of Ornithology released BirdNET, a research platform that aims to recognize birds by sound at scale. It supports various hardware and operating systems such as Arduino microcontrollers, Raspberry Pis, smartphones, web browsers, and workstation PCs.

Learning

Diffusion models are a new type of generative model, flexible enough to learn any arbitrarily complex data distribution. It has been shown recently that diffusion models can generate high-quality images with state-of-the-art performance.

Building Medical 3D Image Segmentation Using Jupyter Notebooks from the NGC Catalog

This post shows how to use an image segmentation notebook to predict brain tumors in MRI images.

Is MLP-Mixer a CNN in Disguise?

This post looks at MLP-Mixer, a new architecture whose authors claim competitive performance with state-of-the-art image classification models without using convolutions or attention.

A series of tutorials to discover TensorFlow and JAX, meant for everyone, from novice to advanced. It begins with the fundamentals, before diving deep into advanced uses of those libraries.

Better computer vision models by combining Transformers and convolutional neural networks

Facebook developed a new computer vision model called ConViT, which combines two widely used AI architectures - convolutional neural networks (CNNs) and Transformer-based models - in order to overcome some important limitations of each approach on its own.

Libraries & Code

PIX: an image processing library in JAX

PIX is DeepMind’s library built on top of JAX with the goal of providing image processing functions and tools in a way that they can be optimised and parallelised using JAX.

DALL·E-mini is an AI model that generates images from any prompt you give, based on OpenAI’s DALL-E neural network architecture.

TensorFlow 2.6.0 has been released, with major features and improvements, including Keras being split into a separate package, useful updates to TensorFlow Lite for Android, bug fixes, and much more.

Papers & Publications

Language models enable zero-shot prediction of the effects of mutations on protein function

Abstract:

Modeling the effect of sequence variation on function is a fundamental problem for understanding and designing proteins. Since evolution encodes information about function into patterns in protein sequences, unsupervised models of variant effects can be learned from sequence data. The approach to date has been to fit a model to a family of related sequences. The conventional setting is limited, since a new model must be trained for each prediction task. We show that using only zero-shot inference, without any supervision from experimental data or additional training, protein language models capture the functional effects of sequence variation, performing at state-of-the-art.

Pretrained Transformers as Universal Computation Engines

Abstract:

We investigate the capability of a transformer pretrained on natural language to generalize to other modalities with minimal finetuning -- in particular, without finetuning of the self-attention and feedforward layers of the residual blocks. We consider such a model, which we call a Frozen Pretrained Transformer (FPT), and study finetuning it on a variety of sequence classification tasks spanning numerical computation, vision, and protein fold prediction. In contrast to prior works which investigate finetuning on the same modality as the pretraining dataset, we show that pretraining on natural language can improve performance and compute efficiency on non-language downstream tasks. Additionally, we perform an analysis of the architecture, comparing the performance of a random initialized transformer to a random LSTM. Combining the two insights, we find language-pretrained transformers can obtain strong performance on a variety of non-language tasks.

Efficient Visual Pretraining with Contrastive Detection

Abstract:

Self-supervised pretraining has been shown to yield powerful representations for transfer learning. These performance gains come at a large computational cost however, with state-of-the-art methods requiring an order of magnitude more computation than supervised pretraining. We tackle this computational bottleneck by introducing a new self-supervised objective, contrastive detection, which tasks representations with identifying object-level features across augmentations. This objective extracts a rich learning signal per image, leading to state-of-the-art transfer accuracy on a variety of downstream tasks, while requiring up to 10x less pretraining. In particular, our strongest ImageNet-pretrained model performs on par with SEER, one of the largest self-supervised systems to date, which uses 1000x more pretraining data. Finally, our objective seamlessly handles pretraining on more complex images such as those in COCO, closing the gap with supervised transfer learning from COCO to PASCAL.