Deep Learning Weekly: Issue #214

Tesla’s state-of-the-art Dojo chip, Jurassic-1 to challenge GPT-3, Apple’s private on-device machine learning, YOLOv5 matches GPU performance on CPU, how an AI unicorn was built, and more

Hey folks,

This week in deep learning, we bring you Tesla’s D1 Dojo chip, the Center for Research on Foundation Models, a vision of the future of AI, and a paper on ETA prediction using graph neural networks.

You may also enjoy AI21 Studio’s language models, introductory materials about reinforcement learning, a framework for large-scale model training, anomalous data detection and more!

As always, happy reading and hacking. If you have something you think should be in next week's issue, find us on Twitter: @dl_weekly.

Until next week!

Industry

Announcing AI21 Studio and Jurassic-1 Language Models

AI21 Labs, an AI company based in Tel Aviv, has trained a massive language model to rival OpenAI’s GPT-3. It has also launched a developer platform where users can build their own applications and services on top of state-of-the-art language models.

How will we use artificial intelligence in 20 years’ time?

In this podcast, Kai-Fu Lee, one of the most prominent figures in China’s AI space, explains how he thinks AI will have changed the world in twenty years’ time.

How to build an AI unicorn in 6 years

The CEO of Tractable, an AI company building products that help insurers appraise damage from pictures, explains his company’s journey from startup to unicorn.

Introducing the Center for Research on Foundation Models (CRFM)

Stanford has launched a new research center studying the technical principles and societal impact of foundation models, which are models trained on broad data at scale such that they can be adapted to a wide range of downstream tasks.

Discovering Anomalous Data with Self-Supervised Learning

Google introduces a novel framework that uses recent progress in self-supervised learning to perform anomaly detection. The method could also be extended to scenarios where the training data is truly unlabeled.

This AI Helps Detect Wildlife Health Issues in Real Time

Researchers at UC Davis built an early detection system that uses AI to classify admissions to wildlife rehabilitation centers when a disease is spreading, in the hope of sending warnings about growing problems among marine birds and many other kinds of animals.

Mobile & Edge

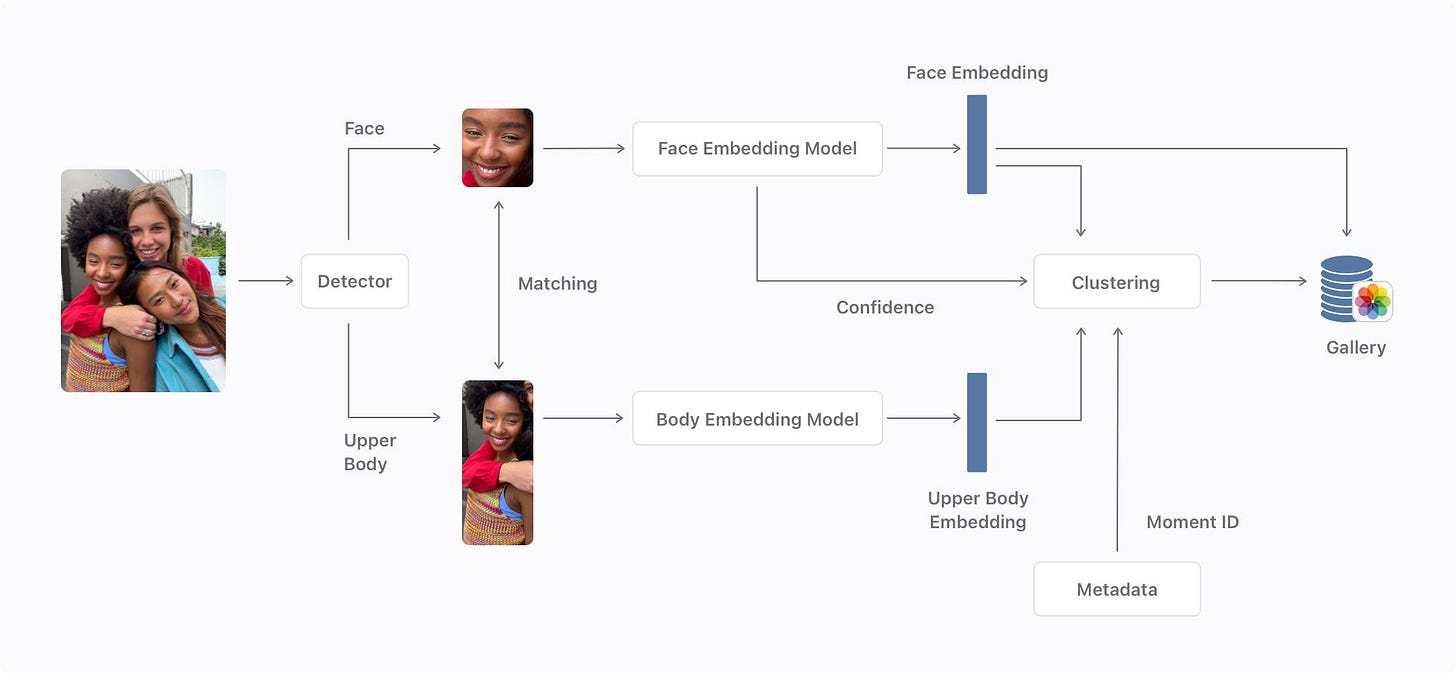

Recognizing People in Photos Through Private On-Device Machine Learning

Apple describes how it built a system able to recognize people in pictures taken by users, while meeting the following constraints: the system must run on-device, guarantee the users’ privacy and ensure equity in the results.

This post dives into Tesla’s D1 Dojo chip built to perform AI calculations, whose inner workings have been revealed recently, and shows how it could outperform existing state-of-the-art systems.

YOLOv5 on CPUs: Sparsifying to Achieve GPU-Level Performance and a Smaller Footprint

Neural Magic describes how it reaches GPU-class performance on CPUs for inference with YOLOv5, an object detection model, by using state-of-the-art pruning and quantization techniques.
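
For readers new to these two ideas, here is a minimal sketch in plain Python. It is not Neural Magic's pipeline: it assumes the simplest variants, one-shot magnitude pruning and symmetric int8 quantization, and the function names (`magnitude_prune`, `quantize_int8`, `dequantize`) and the sample weights are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the `sparsity` fraction of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)  # number of weights to drop
    # Indices ordered by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(order[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Symmetric linear quantization: map floats to integers in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the int8 representation."""
    return [v * scale for v in q]


# Hypothetical weights for illustration only.
weights = [0.02, -1.27, 0.5, -0.01, 0.9, 0.03]
pruned = magnitude_prune(weights, sparsity=0.5)  # half of the weights become 0
q, scale = quantize_int8(pruned)                 # small integers plus one scale
restored = dequantize(q, scale)
```

Zeros can be skipped at inference time and 8-bit integers are cheaper to store and multiply than 32-bit floats, which is broadly why the two techniques are combined for CPU inference. Production approaches typically prune gradually during training and calibrate quantization on real data rather than applying both in one shot as above.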

Samsung Has Its Own AI-Designed Chip. Soon, Others Will Too

Synopsys, which sells software for designing semiconductors to dozens of companies, is adding artificial intelligence to its arsenal to automate the insanely complex and subtle process of designing cutting-edge computer chips.

Learning

Reinforcement Learning Course Materials

This GitHub repository contains all lecture notes, tutorials, and online videos for the reinforcement learning course hosted by Paderborn University. Source code for the entire course material is open.

How DeepMind's Generally Capable Agents Were Trained

This post is a high-level summary of DeepMind’s paper describing how to train agents that can successfully play games as complex as hide-and-seek or capture-the-flag without even having seen these games before.

A Gentle Introduction to Graph Neural Networks

A clear, illustrated introduction to graph neural networks, detailing the neural network architectures and their potential applications.

In this lecture, Jeremy Howard, an Australian entrepreneur, recounts his journey in the world of deep learning, mixing theory with practice across many fields.

Mapping Africa’s Buildings with Satellite Imagery

This post describes how Google built an open dataset of all buildings in Africa by applying state-of-the-art building detection models to satellite imagery.

Libraries & Code

This post provides a PyTorch implementation of the paper PonderNet: Learning to Ponder, introducing a new algorithm that learns to adapt the amount of computation based on the complexity of the problem at hand.
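
To give a feel for what "adapting the amount of computation" can mean, here is a minimal sketch in plain Python rather than the linked PyTorch code. It assumes the formulation in which the network emits, at each step, a conditional probability of halting at that step; the function names and the numbers are hypothetical, and the regularization term that pulls the halting distribution toward a geometric prior is left out.

```python
def halting_distribution(lambdas):
    """Turn per-step conditional halting probabilities into a distribution.

    p_n = lambda_n * prod_{j < n} (1 - lambda_j): the probability of not
    having halted before step n, times the probability of halting at step n.
    """
    probs = []
    not_halted = 1.0  # probability of reaching the current step
    for lam in lambdas:
        probs.append(not_halted * lam)
        not_halted *= 1.0 - lam
    return probs


def expected_loss(probs, step_losses):
    """Weight the loss of each step's prediction by its halting probability."""
    return sum(p * loss for p, loss in zip(probs, step_losses))


# Hypothetical values: the last lambda is forced to 1.0 so that the model
# always halts by the final step and the probabilities sum to one.
lambdas = [0.5, 0.5, 1.0]
step_losses = [4.0, 2.0, 1.0]  # later steps predict better in this toy case

probs = halting_distribution(lambdas)      # [0.5, 0.25, 0.25]
loss = expected_loss(probs, step_losses)   # 2.75
```

Because the loss is an expectation over halting steps, gradients flow into the halting probabilities themselves, so an easy input can learn to stop early while a hard one keeps computing.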

100+ Open Audio and Video Datasets

In this post, Twine, a company specializing in creating datasets for AI models, has put together a list of 100+ open audio and video datasets, with their main characteristics (number of recordings, number of participants, languages, …).

Mistral: a framework for transparent and accessible large-scale language model training

Mistral is a framework built with Hugging Face to facilitate large-scale language model training. It includes tools for incorporating new pre-training datasets, various schemes for single node and distributed training, and scripts for evaluation.

Papers & Publications

Vision Transformer with Progressive Sampling

Abstract:

Transformers with powerful global relation modeling abilities have been introduced to fundamental computer vision tasks recently. As a typical example, the Vision Transformer (ViT) directly applies a pure transformer architecture on image classification, by simply splitting images into tokens with a fixed length, and employing transformers to learn relations between these tokens. However, such naive tokenization could destruct object structures, assign grids to uninterested regions such as background, and introduce interference signals. To mitigate the above issues, in this paper, we propose an iterative and progressive sampling strategy to locate discriminative regions. At each iteration, embeddings of the current sampling step are fed into a transformer encoder layer, and a group of sampling offsets is predicted to update the sampling locations for the next step. The progressive sampling is differentiable. When combined with the Vision Transformer, the obtained PS-ViT network can adaptively learn where to look. The proposed PS-ViT is both effective and efficient. When trained from scratch on ImageNet, PS-ViT performs 3.8% higher than the vanilla ViT in terms of top-1 accuracy with about 4× fewer parameters and 10× fewer FLOPs. Code is available at this https URL.

ETA Prediction with Graph Neural Networks in Google Maps

Abstract:

Travel-time prediction constitutes a task of high importance in transportation networks, with web mapping services like Google Maps regularly serving vast quantities of travel time queries from users and enterprises alike. Further, such a task requires accounting for complex spatiotemporal interactions (modelling both the topological properties of the road network and anticipating events -- such as rush hours -- that may occur in the future). Hence, it is an ideal target for graph representation learning at scale. Here we present a graph neural network estimator for estimated time of arrival (ETA) which we have deployed in production at Google Maps. While our main architecture consists of standard GNN building blocks, we further detail the usage of training schedule methods such as MetaGradients in order to make our model robust and production-ready. We also provide prescriptive studies: ablating on various architectural decisions and training regimes, and qualitative analyses on real-world situations where our model provides a competitive edge. Our GNN proved powerful when deployed, significantly reducing negative ETA outcomes in several regions compared to the previous production baseline (40+% in cities like Sydney).