Deep Learning Weekly: Issue #215

TensorFlow Similarity, faster quantized inference with XNNPACK, an introduction to generative spoken language models, lettuce-picking robots, transformer-based 3D dance generation, and more

Hey folks,

This week in deep learning, we bring you Tensorflow Similarity, faster quantized inference with XNNPACK, the world's first 5G and AI enabled drone platform and a paper on transformer-based 3D dance generation.

You may also enjoy Intel's advancements in the area of multiagent evolutionary reinforcement learning, on-device image recognition resources for ESP32, a technical introduction to generative spoken language models, a paper on fastformers, and more!

As always, happy reading and hacking. If you have something you think should be in next week’s issue, find us on Twitter: @dl_weekly.

Until next week!

Industry

Introducing TensorFlow Similarity

Tensorflow releases the first version of a python package designed to make it easy and fast to train similarity models.

Researchers lay the groundwork for an AI hive mind

Intel’s advances in the area of multi-agent evolutionary reinforcement learning (MERL) is a step towards what one may call a non-sentient hive mind.

Betting On Horses with No-Code AI

Akkio's platform was able to build a money-making model with 700 rows of training data consisting of the history of horses scheduled to run at Saratoga Race Course.

Researchers have created a new technique to stop adversarial attacks

A new technique developed by researchers at Carnegie Mellon University and the KAIST Cybersecurity Research Center employs unsupervised learning to address some of the challenges of current methods used to detect adversarial attacks.

Everyone will be able to clone their voice in the future

Cloning your voice using artificial intelligence is simultaneously tedious and simple: hallmarks of a technology that’s just about mature and ready to go public.

Brain-Inspired AI Will Enable Future Medical Implants

In a new study led by researchers from TU Dresden, researchers created a system made from networks of tiny polymer fibers that, when submerged in a solution meant to replicate the inside of the human body, function as organic transistors.

Mobile & Edge

Faster Quantized Inference with XNNPACK

Tensorflow extends the XNNPACK backend to quantized models with, on average across computer vision models, 30% speedup on ARM64 mobile phones, 5X speedup on x86-64 laptop and desktop systems, and 20X speedup for in-browser inference.

Qualcomm Flight RB5 5G Platform — the world's first 5G- and AI-enabled drone platform

Designed for small, unmanned aircraft systems, the Qualcomm Flight RB5 Platform combines low-power computing and camera systems with AI, and other connectivity features to bring together advanced imaging capabilities and drone-to-drone communication.

Sniffing Out Industrial Chemicals Using tinyML

A compact and intelligent VOC detection system based on a trained tinyML model deployed to a low-power embedded device.

A handful of resources for using the ESP32 with multiple different camera modules to run image recognition models on-device.

Lettuce-Picking Robots Are Transforming Farming on a 'Hands-Free' Farm

In Australia, innovators have just presented the country's first fully automated AI-enabled farm spanning 1,900 hectares.

Learning

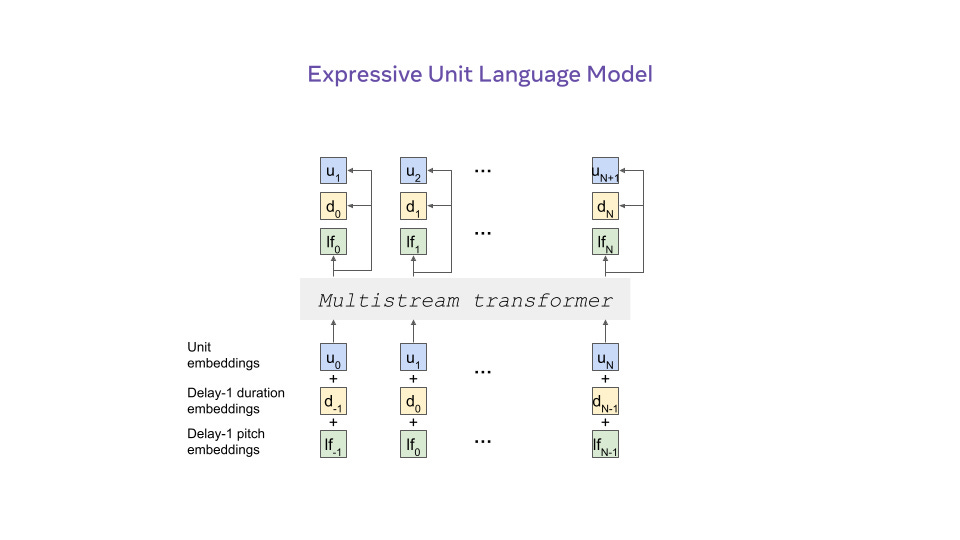

Textless NLP: Generating expressive speech from raw audio

A technical introduction to Generative Spoken Language Model (GSLM), the first high-performance NLP model that incorporates the full range of expressivity of oral language without application restrictions.

Has AI found a new Foundation?

An in-depth review of Stanford’s recent paper on foundation models.

How Big Data Carried Graph Theory Into New Dimensions

Researchers are turning to the mathematics of higher-order interactions to better model the complex connections within their data.

Introducing Optimum: The Optimization Toolkit for Transformers at Scale

A comprehensive introduction to Optimum, an optimization toolkit that provides performance optimization tools targeting efficient AI hardware and built-in collaboration with hardware partners.

Libraries & Code

CARLA: A Python Library to Benchmark Algorithmic Recourse and Counterfactual Explanation Algorithms

A python library to benchmark counterfactual explanation and recourse models.

Cockpit: A Practical Debugging Tool for Training Deep Neural Networks

A visual and statistical debugger specifically designed for deep learning.

A set of example templates to accelerate the delivery of custom ML solutions to production so you can get started quickly without having to make too many design choices.

Papers & Publications

AI Choreographer: Music Conditioned 3D Dance Generation with AIST++

Abstract:

We present AIST++, a new multi-modal dataset of 3D dance motion and music, along with FACT, a Full-Attention Cross-modal Transformer network for generating 3D dance motion conditioned on music. The proposed AIST++ dataset contains 5.2 hours of 3D dance motion in 1408 sequences, covering 10 dance genres with multi-view videos with known camera poses -- the largest dataset of this kind to our knowledge. We show that naively applying sequence models such as transformers to this dataset for the task of music conditioned 3D motion generation does not produce satisfactory 3D motion that is well correlated with the input music. We overcome these shortcomings by introducing key changes in its architecture design and supervision: FACT model involves a deep cross-modal transformer block with full-attention that is trained to predict N future motions. We empirically show that these changes are key factors in generating long sequences of realistic dance motion that are well-attuned to the input music. We conduct extensive experiments on AIST++ with user studies, where our method outperforms recent state-of-the-art methods both qualitatively and quantitatively.

Fastformer: Additive Attention Can Be All You Need

Abstract:

Transformer is a powerful model for text understanding. However, it is inefficient due to its quadratic complexity to input sequence length. Although there are many methods on Transformer acceleration, they are still either inefficient on long sequences or not effective enough. In this paper, we propose Fastformer, which is an efficient Transformer model based on additive attention. In Fastformer, instead of modeling the pair-wise interactions between tokens, we first use additive attention mechanism to model global contexts, and then further transform each token representation based on its interaction with global context representations. In this way, Fastformer can achieve effective context modeling with linear complexity. Extensive experiments on five datasets show that Fastformer is much more efficient than many existing Transformer models and can meanwhile achieve comparable or even better long text modeling performance.