Deep Learning Weekly: Issue #226

TorchGeo, OpenFold, Earth-2, DCGANs, PyTorch Lightning v1.5, and more

Hey folks,

This week in deep learning, we bring you Jina.ai’s funding round, a tutorial on DCGANs, a reinforcement learning library, and a new paper on AlphaZero.

You may also enjoy an article about foundation models, a report on AI for People & Planet, an implementation of Transformers from-scratch, and more!

As always, happy reading and hacking. If you have something you think should be in next week's issue, find us on Twitter: @dl_weekly.

Until next week!

Industry

NVIDIA Creates Framework for AI to Learn Physics

NVIDIA announced Modulus, an AI framework that provides a customizable and easy-to-adopt physics-based toolkit to build neural network models. It has applications in various fields such as climate science or protein engineering.

Jina.ai raises $30M for its neural search platform

Berlin-based Jina.ai, an open source startup that uses neural search to help its users find information in their unstructured data, announced that it has raised a $30 million Series A funding round.

‘Foundation models’ may be the future of AI. They’re also deeply flawed

Foundation models like GPT-3 and BERT have acquired outsized influence. That influence underscores the need for stronger regulation before the harms of individual models are perpetuated across application ecosystems.

Announcing PyTorch Lightning v1.5

The latest version of PyTorch Lightning, a framework that simplifies coding complex networks, adds Fault-Tolerant Training, LightningLite, Loops Customization, Lightning Tutorials, and more.

University College London has published its insights about future uses of AI, encapsulating the belief that the purpose of research and innovation in AI is ultimately to benefit people and societies around the world, and to make a positive impact on the planet.

Mobile & Edge

Seeing into our future with Nvidia’s Earth-2

Nvidia revealed plans to build the world’s most powerful AI supercomputer dedicated to predicting climate change. Named Earth-2, the system would create a digital twin of Earth in Omniverse, Nvidia’s platform for 3D-simulation.

Machine learning on microcontrollers enables AI

This discussion highlights the benefits, but also the limits, of running today’s AI systems on small devices such as microcontrollers. Doing so makes it possible to run inference at the edge, where the data is generated.

There is now a superabundance of start-ups taking on the challenge of moving neural network-based machine learning from the cloud data center to embedded systems in the field. For the first time in a couple of decades, hardware is at the center of attention.

Learning

Deep Convolutional Generative Adversarial Network (DCGAN)

A beginner-level tutorial for generating images of handwritten digits using a Deep Convolutional Generative Adversarial Network (DCGAN), one of the earliest GAN architectures to build both the generator and the discriminator from convolutional layers.

This blog post outlines theoretical and practical insights about how neural networks are able to generalize to unseen data. It can be summarized in one sentence: “Memorization is the first step towards generalization!”

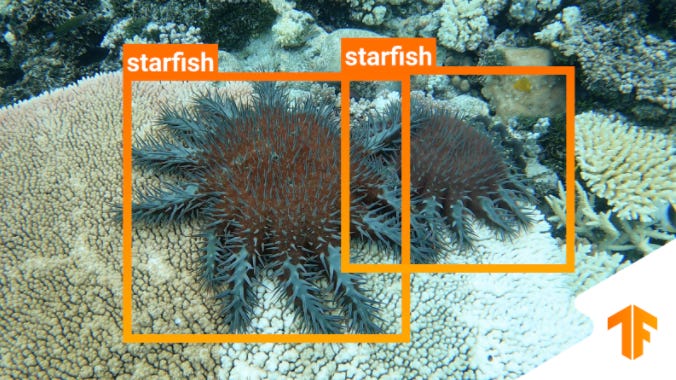

Announcing TensorFlow’s Kaggle Challenge to Help Protect Coral Reefs

This TensorFlow-sponsored Kaggle challenge aims at locating and identifying harmful crown-of-thorns starfish (COTS). This is an amazing opportunity to have a real impact protecting coral reefs everywhere!

This post builds, step-by-step, a from-scratch implementation of a Transformer, the most performant architecture for many NLP applications. It is long but worth it if you want to understand in-depth how transformers work.
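For a taste of what such an implementation involves, here is a minimal sketch of scaled dot-product attention, the core operation of a Transformer, in plain Python. It is not taken from the linked post: the function names and the toy input are our own assumptions, and a real implementation would use batched tensor operations, learned projections, and multiple heads.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Each argument is a list of vectors (lists of floats).
    Returns the attended outputs and the attention weights.
    """
    scale = math.sqrt(len(keys[0]))
    weights = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights.append(softmax(scores))
    dim = len(values[0])
    # Each output is a weighted average of the value vectors.
    outputs = [
        [sum(w * v[j] for w, v in zip(row, values)) for j in range(dim)]
        for row in weights
    ]
    return outputs, weights


# Toy self-attention: three tokens with two-dimensional embeddings (made up).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

Each row of `weights` sums to one, so every output vector is a convex combination of the value vectors.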

This guide walks through the Keras Model class, one of the centerpieces of the framework. Understanding this class is necessary if you want to build and train your own custom models.

Libraries & Code

OpenFold: A faithful PyTorch reproduction of DeepMind's AlphaFold 2

OpenFold carefully reproduces all of the features of DeepMind’s AlphaFold2. Unlike DeepMind's public code, OpenFold is also trainable and can be trained with DeepSpeed and with mixed precision.

TorchGeo is a PyTorch domain library, similar to torchvision, that provides datasets, transforms, samplers, and pre-trained models specific to geospatial data.

SaLinA: A Flexible and Simple Library for Learning Sequential Agents

SaLinA is a library extending PyTorch modules for developing sequential decision models. It can be used both for Reinforcement Learning and in supervised / unsupervised learning settings (for instance for NLP, Computer Vision, etc.).

Papers & Publications

PyTorchVideo: A Deep Learning Library for Video Understanding

Abstract

We introduce PyTorchVideo, an open-source deep-learning library that provides a rich set of modular, efficient, and reproducible components for a variety of video understanding tasks, including classification, detection, self-supervised learning, and low-level processing. The library covers a full stack of video understanding tools including multimodal data loading, transformations, and models that reproduce state-of-the-art performance. PyTorchVideo further supports hardware acceleration that enables real-time inference on mobile devices. The library is based on PyTorch and can be used by any training framework; for example, PyTorchLightning, PySlowFast, or Classy Vision.

Gradients are Not All You Need

Abstract

Differentiable programming techniques are widely used in the community and are responsible for the machine learning renaissance of the past several decades. While these methods are powerful, they have limits. In this short report, we discuss a common chaos based failure mode which appears in a variety of differentiable circumstances, ranging from recurrent neural networks and numerical physics simulation to training learned optimizers. We trace this failure to the spectrum of the Jacobian of the system under study, and provide criteria for when a practitioner might expect this failure to spoil their differentiation based optimization algorithms.
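To make the Jacobian argument concrete, here is a small sketch of our own (not code from the paper) that differentiates through an iterated logistic map, a standard toy dynamical system. The gradient of the final state with respect to the initial state is the product of the per-step Jacobians, which in one dimension are just scalars. The function name, the parameter values, and the choice of map are assumptions made for illustration.

```python
def logistic_gradient(r, x0, steps):
    """Iterate x <- r * x * (1 - x) and track d(x_final) / d(x0).

    By the chain rule, the gradient is the product of the local
    Jacobians f'(x) = r * (1 - 2x) along the trajectory.
    """
    x = x0
    grad = 1.0
    for _ in range(steps):
        grad *= r * (1.0 - 2.0 * x)  # Jacobian at the current state
        x = r * x * (1.0 - x)        # advance the system one step
    return x, grad


# r = 2.5 settles onto a stable fixed point; r = 4.0 is the chaotic regime.
_, grad_stable = logistic_gradient(2.5, 0.2, 50)
_, grad_chaotic = logistic_gradient(4.0, 0.2, 50)

print(f"stable  |gradient|: {abs(grad_stable):.3e}")
print(f"chaotic |gradient|: {abs(grad_chaotic):.3e}")
```

In the stable regime the local Jacobians have magnitude below one near the fixed point, so the gradient shrinks toward zero. In the chaotic regime their magnitudes average above one, so the gradient grows roughly exponentially with the number of steps, which is the kind of blow-up the abstract warns about.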

Acquisition of Chess Knowledge in AlphaZero

Abstract

What is being learned by superhuman neural network agents such as AlphaZero? This question is of both scientific and practical interest. If the representations of strong neural networks bear no resemblance to human concepts, our ability to understand faithful explanations of their decisions will be restricted, ultimately limiting what we can achieve with neural network interpretability. In this work we provide evidence that human knowledge is acquired by the AlphaZero neural network as it trains on the game of chess. By probing for a broad range of human chess concepts we show when and where these concepts are represented in the AlphaZero network. We also provide a behavioural analysis focusing on opening play, including qualitative analysis from chess Grandmaster Vladimir Kramnik. Finally, we carry out a preliminary investigation looking at the low-level details of AlphaZero's representations, and make the resulting behavioural and representational analyses available online.